
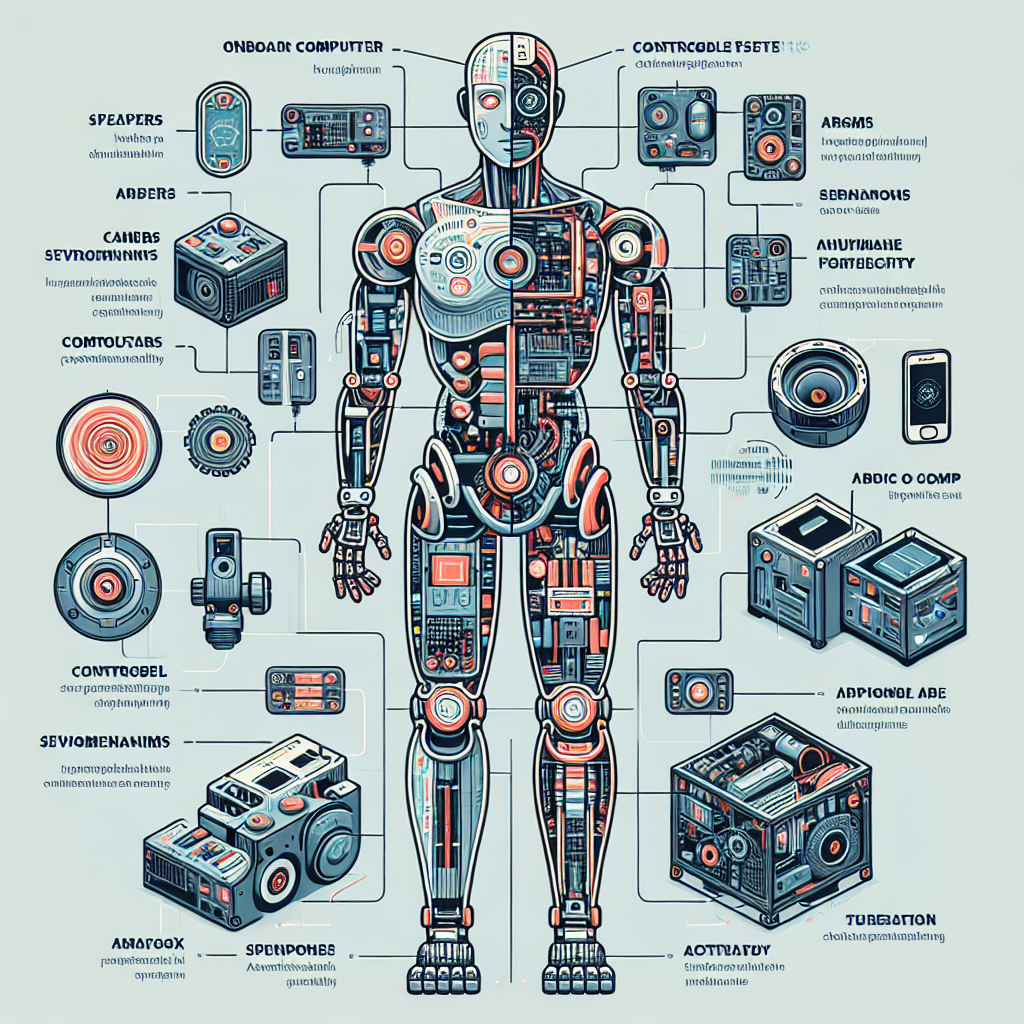

“Empowering the Future: The Essential Core Components of Autonomous Robots.”

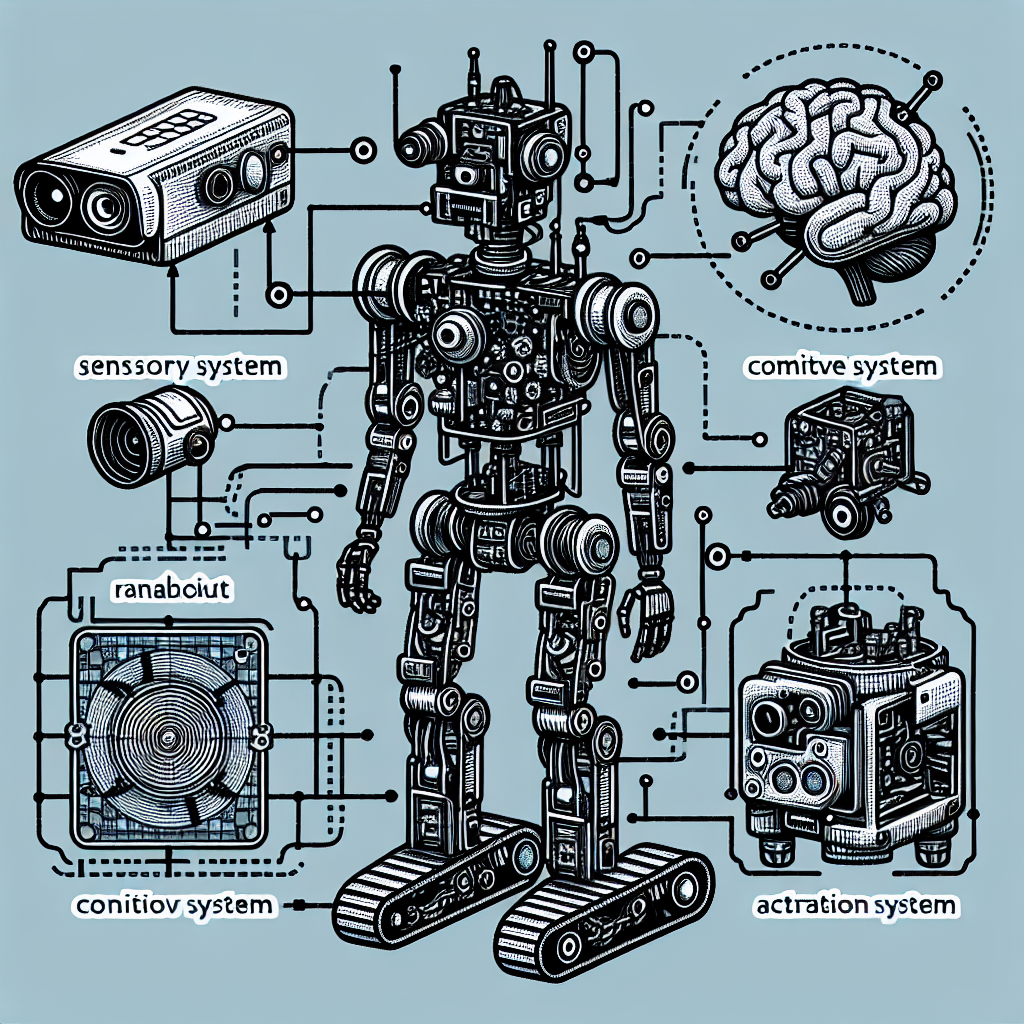

Autonomous robots are complex systems designed to perform tasks without human intervention, relying on a combination of advanced technologies and core components. These components include sensors, which gather data from the environment; actuators, which enable movement and manipulation; processing units, which analyze data and make decisions; and communication systems, which facilitate interaction with other devices and systems. Together, these elements enable robots to navigate, perceive their surroundings, and execute tasks efficiently and effectively, paving the way for applications across various industries, from manufacturing to healthcare and beyond.

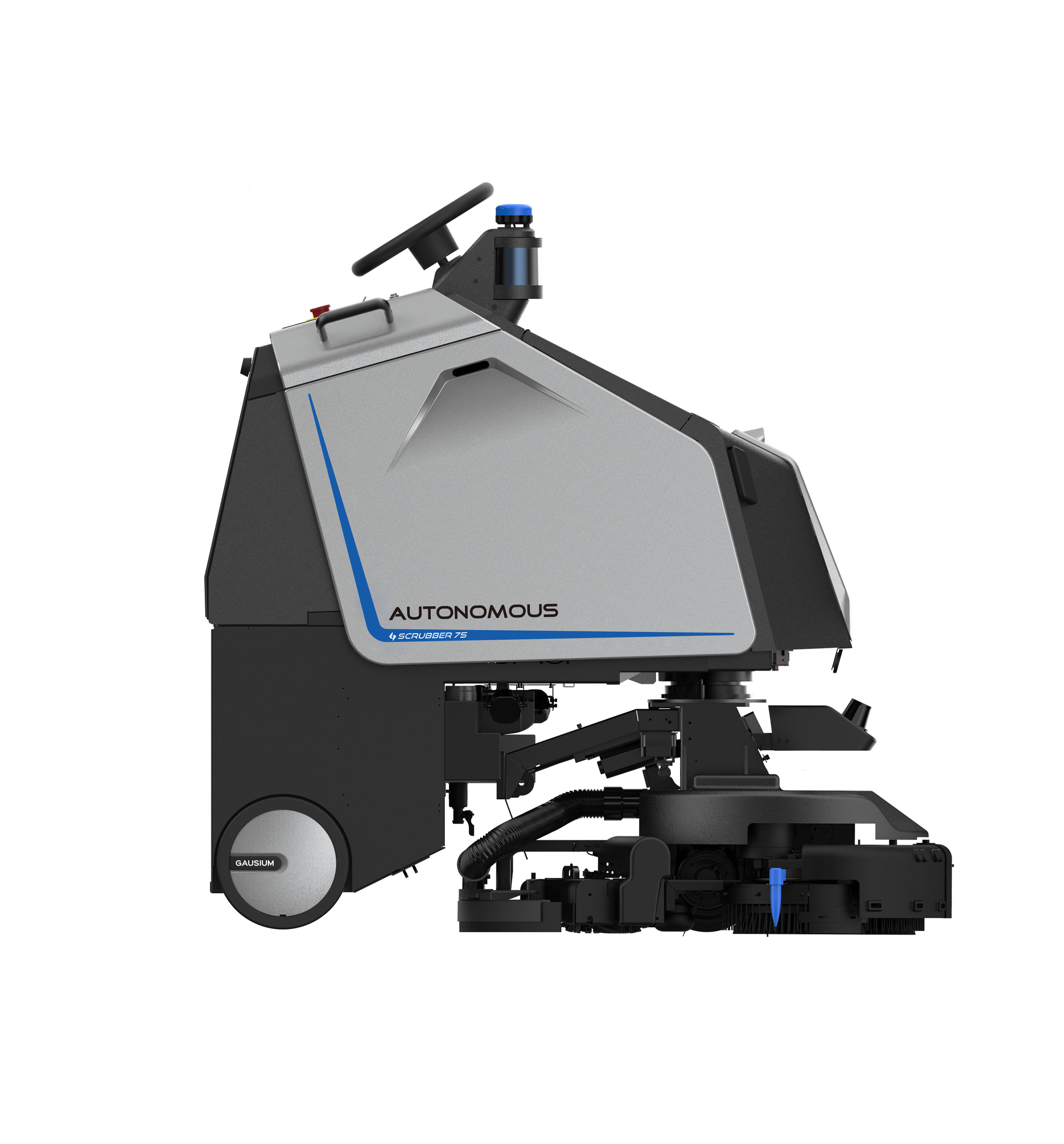

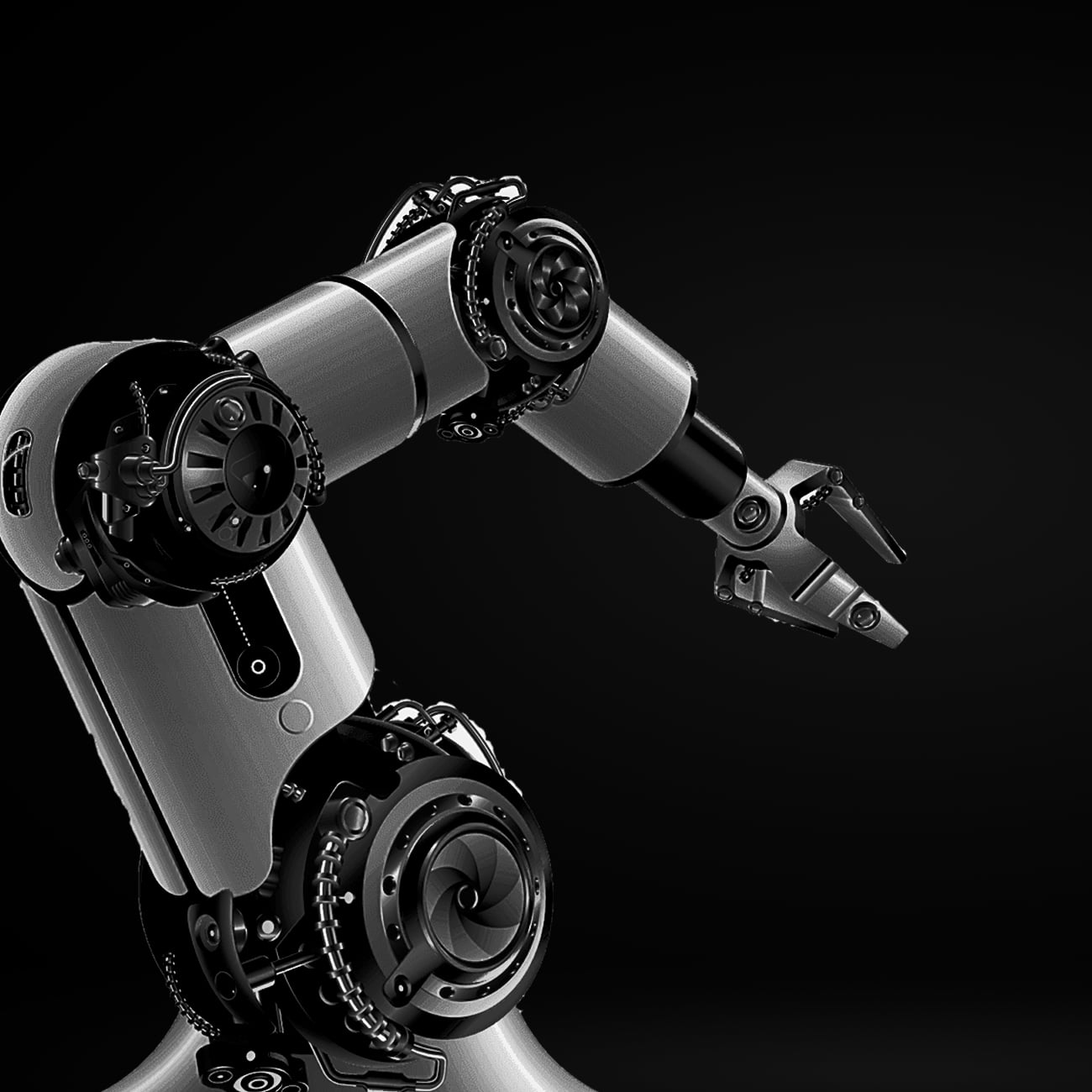

Actuation Mechanisms in Robotics

In the rapidly evolving field of robotics, actuation mechanisms play a pivotal role in determining the functionality and efficiency of autonomous robots. These mechanisms are responsible for converting energy into motion, enabling robots to perform a wide array of tasks, from simple movements to complex operations in dynamic environments. Understanding the various actuation mechanisms is essential for engineers and developers aiming to design robots that can operate effectively in real-world scenarios.

One of the most common actuation mechanisms is the electric motor, which is widely used because it is reliable and easy to control. Electric motors come in brushed and brushless designs, each with distinct advantages. Brushed motors are simpler and cheaper, which makes them suitable for cost-sensitive applications where brush wear and somewhat lower efficiency are acceptable. Brushless motors replace the mechanical brushes with electronic commutation. This gives them higher efficiency and longer lifespans and makes them ideal for applications that require sustained performance. As a result, the choice of motor type often depends on the specific requirements of the robotic application, such as speed, torque, and operating environment.
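To illustrate why electric motors are considered easy to control, here is a minimal sketch of closed-loop speed control. It assumes a simplified first-order motor model, in which speed settles toward `gain × voltage` with time constant `tau`, and a PI controller with simple anti-windup. The function name `simulate_speed_control` and every numeric value are hypothetical and chosen only for illustration. None of them come from a real motor datasheet.

```python
def simulate_speed_control(target_speed, steps=2000, dt=0.001,
                           gain=10.0, tau=0.05, kp=0.5, ki=20.0, v_max=24.0):
    """PI speed control of a hypothetical first-order DC motor model.

    gain:  steady-state speed per volt (rad/s per V), assumed value
    tau:   mechanical time constant (s), assumed value
    v_max: supply voltage limit (V), assumed value
    Returns the motor speed (rad/s) after `steps` time steps of length `dt`.
    """
    speed = 0.0
    integral = 0.0
    for _ in range(steps):
        error = target_speed - speed
        unclamped = kp * error + ki * (integral + error * dt)
        voltage = max(-v_max, min(v_max, unclamped))
        if voltage == unclamped:
            # Anti-windup: only accumulate the integral while the output is not saturated
            integral += error * dt
        # Forward-Euler step of d(speed)/dt = (gain * voltage - speed) / tau
        speed += dt * (gain * voltage - speed) / tau
    return speed


final = simulate_speed_control(100.0)
print(f"speed after 2 s: {final:.2f} rad/s")  # settles near the 100 rad/s target
```

The integral term removes the steady-state error. At equilibrium the proportional term is zero, and the integral term alone supplies the voltage needed to hold the target speed.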

In addition to electric motors, pneumatic actuators are another significant component in robotics. These actuators use compressed air to create motion and are fast and mechanically simple. Pneumatic systems are particularly useful where quick, lightweight movements are essential, such as in grippers and pick-and-place units on assembly lines. However, air is compressible, so precise intermediate positioning is harder to achieve than with electric motors. In addition, producing compressed air is energy-inefficient, and the compressor, valves, and tubing add system complexity. Engineers must therefore weigh these trade-offs carefully when integrating pneumatic actuators into their designs.

Hydraulic actuators work on a similar principle but use a pressurized, nearly incompressible fluid, typically oil, to generate motion. They are known for producing very high forces, which makes them suitable for heavy-duty applications such as construction and industrial robots. However, hydraulic systems need pumps, reservoirs, and leak-prone lines, so they tend to be bulky and maintenance-intensive. This can limit their use in smaller or more agile robotic platforms. As such, the choice between pneumatic and hydraulic actuation often hinges on the specific demands of the task at hand.
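The force gap between pneumatic and hydraulic actuators follows directly from F = P × A: for a given cylinder bore, output force scales with supply pressure. The sketch below assumes typical supply pressures of about 0.6 MPa (6 bar) for shop air and 20 MPa (200 bar) for hydraulics, along with a hypothetical 50 mm bore. Real systems vary, and the sketch ignores friction and seal losses.

```python
import math


def cylinder_force(pressure_pa, bore_m):
    """Theoretical extension force of a cylinder, F = P * A (friction ignored)."""
    area = math.pi * (bore_m / 2) ** 2  # piston area in m^2
    return pressure_pa * area


bore = 0.05                               # 50 mm bore, assumed for illustration
pneumatic = cylinder_force(0.6e6, bore)   # ~6 bar shop air (assumed)
hydraulic = cylinder_force(20e6, bore)    # ~200 bar hydraulic supply (assumed)
print(f"pneumatic: {pneumatic:.0f} N, hydraulic: {hydraulic:.0f} N")
```

With these assumed numbers, the pneumatic cylinder pushes roughly 1.2 kN and the hydraulic one roughly 39 kN. The ratio of about 33 equals the pressure ratio.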

Moreover, advancements in materials science have led to the development of soft actuators, which mimic the flexibility and adaptability of biological systems. These actuators, often made from elastomers or other compliant materials, enable robots to navigate complex environments and interact safely with humans. Soft robotics is an emerging field that holds great promise for applications in healthcare, search and rescue, and other areas where traditional rigid robots may struggle. The integration of soft actuators into robotic systems represents a significant shift in design philosophy, emphasizing adaptability and safety.

As the field of robotics continues to advance, the exploration of novel actuation mechanisms remains a critical area of research. Innovations such as shape memory alloys and electroactive polymers are being investigated for their potential to create more efficient and versatile actuation systems. These emerging technologies could change the way robots are designed and operated, enabling tasks that are impractical with conventional actuators.

In conclusion, actuation mechanisms are fundamental to the performance and capabilities of autonomous robots. By understanding the strengths and limitations of various actuation technologies, engineers can make informed decisions that enhance the functionality and efficiency of robotic systems. As the industry progresses, the ongoing exploration of new actuation methods is likely to lead to more sophisticated and capable autonomous robots, paving the way for their use in an increasingly diverse range of applications.

Navigation Algorithms for Autonomous Systems

In the rapidly evolving field of autonomous robotics, navigation algorithms play a pivotal role in enabling systems to operate effectively in dynamic environments. These algorithms are essential for ensuring that robots can perceive their surroundings, make informed decisions, and execute tasks with precision. As the demand for autonomous systems increases across various industries, understanding the intricacies of navigation algorithms becomes crucial for developers and businesses alike.

At the heart of navigation algorithms lies the ability to process sensory data. Autonomous robots are equipped with a variety of sensors, including cameras, LiDAR, and ultrasonic sensors, which gather information about the environment. Algorithms then turn this sensory input into estimates of the robot’s position and orientation. For instance, simultaneous localization and mapping (SLAM) is a widely used technique that allows robots to build a map of an unknown environment while simultaneously keeping track of their location within that map. By integrating data from multiple sensors, SLAM enhances the robot’s ability to navigate complex spaces, making it invaluable for applications ranging from warehouse automation to autonomous vehicles.
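A complete SLAM system is too large for a short example, but its mapping half can be sketched. This sketch assumes the robot's pose is already known; SLAM itself must estimate the pose at the same time. Under that assumption, an occupancy grid stores the log-odds that each cell is occupied and adds a fixed increment for each observation. The sketch reduces this to a single row of cells and one beam direction. The 0.7/0.3 sensor-model probabilities and the helper names are illustrative assumptions.

```python
import math

# Assumed inverse sensor model: a "hit" means 70% occupied, a pass-through 30%.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.3 / 0.7)


def update_row(log_odds, robot_cell, hit_cell):
    """Apply one range reading cast along a row of cells, from a known robot cell.

    Cells the beam passes through become more likely free;
    the cell where the beam ended becomes more likely occupied.
    """
    for cell in range(robot_cell + 1, hit_cell):
        log_odds[cell] += L_FREE
    log_odds[hit_cell] += L_OCC


def probability(l):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))


row = [0.0] * 10  # log-odds 0 means p = 0.5, i.e. unknown
for _ in range(3):  # three consistent readings from the same known pose
    update_row(row, robot_cell=0, hit_cell=6)
print([round(probability(l), 2) for l in row])
```

After three readings, cells 1 to 5 fall to an occupancy probability of about 0.07 and cell 6 rises to about 0.93. The cells behind the hit stay at 0.5 because the beam never observed them.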

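Moreover, the choice of navigation algorithm can significantly affect a robot’s efficiency and effectiveness. Path planning algorithms such as Dijkstra’s algorithm and A* find the lowest-cost route from a starting point to a destination while avoiding obstacles. Both operate on a graph representation of the environment, for example an occupancy grid whose free cells are nodes connected to their neighbors. Dijkstra’s algorithm expands nodes strictly in order of their cost from the start. A* adds a heuristic estimate of the remaining cost to the goal, which focuses the search and still guarantees an optimal path as long as the heuristic never overestimates. On maps of moderate size, both are fast enough for real-time replanning. As a result, businesses can benefit from reduced operational costs and improved productivity, as robots can navigate more swiftly and safely through their designated tasks.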
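As a sketch of the path-planning idea above, the following code implements A* on a small 4-connected occupancy grid with unit move costs. It uses a Manhattan-distance heuristic, which never overestimates on such a grid. The grid, the function name, and the cost model are illustrative assumptions, and the code is not a production planner.

```python
import heapq


def a_star(grid, start, goal):
    """A* on a 4-connected grid; grid[r][c] == 1 marks an obstacle.

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk back through the parent links
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],  # a wall with a single gap on the right
    [0, 0, 0, 0],
]
path = a_star(grid, (0, 0), (2, 0))
print(path)
```

Replacing `h` with a function that always returns 0 turns this into Dijkstra's algorithm. It returns an equally short path but typically expands more cells.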

In addition to path planning, real-time obstacle avoidance is another critical component of navigation algorithms. Because robots operate in unpredictable environments, they must adapt to sudden changes, such as moving obstacles or unexpected terrain. Reactive navigation strategies, which rely on immediate sensory feedback, enable robots to make quick adjustments to their paths. One common technique is potential fields, in which the goal exerts an attractive force and nearby obstacles exert repulsive ones. Another is the dynamic window approach, which samples the velocity commands the robot can reach within its acceleration limits and picks the one that best balances progress toward the goal, clearance from obstacles, and speed. Both let robots steer around obstacles while still progressing toward their goal. This adaptability is essential for applications in crowded spaces, such as hospitals or retail environments, where the presence of people and objects can vary significantly.
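The following is a minimal potential-field sketch. It treats the robot as a point in 2D and the obstacles as points, and it uses hand-picked parameters (`k_att`, `k_rep`, the `influence` radius, and the step length are all hypothetical). The goal pulls the robot linearly, and each obstacle within the influence radius pushes it away according to the classic repulsive-potential gradient. Potential fields are known to get trapped in local minima where attraction and repulsion cancel, for example when an obstacle sits directly on the line to the goal. This is one reason they are often paired with a global path planner.

```python
import math


def potential_field_step(pos, goal, obstacles, k_att=1.0, k_rep=0.5,
                         influence=2.0, step=0.1):
    """Take one fixed-length step along the combined attractive and repulsive force."""
    # Attractive force pulls linearly toward the goal
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0 < d < influence:
            # Repulsive force: zero at the influence radius, growing sharply near the obstacle
            mag = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    norm = math.hypot(fx, fy)
    if norm == 0:
        return pos  # no net force: at the goal or stuck in a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)


pos, goal = (0.0, 0.0), (5.0, 0.0)
obstacles = [(2.5, 0.5)]  # hypothetical obstacle just beside the straight-line path
min_clearance = float("inf")
for _ in range(200):
    if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) < 0.1:
        break
    pos = potential_field_step(pos, goal, obstacles)
    min_clearance = min(min_clearance, math.hypot(pos[0] - 2.5, pos[1] - 0.5))
print(pos, min_clearance)
```

With the obstacle just beside the straight-line path, the robot bends away from it and never comes closer than 0.5 to the obstacle's center. It reaches the goal in roughly 50 steps.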

Furthermore, the integration of artificial intelligence and machine learning into navigation algorithms is expanding the capabilities of autonomous systems. By learning from data and past experience, robots can improve their navigation strategies over time, which allows them to handle more complex tasks and environments. For instance, reinforcement learning optimizes a navigation strategy through trial and error. The robot tries actions and receives a reward signal, for example for reaching its goal without collisions. Over time, it favors the actions that lead to higher long-term reward.
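Tabular Q-learning is one concrete form of the trial-and-error learning described above. The sketch below trains an agent on a hypothetical one-dimensional corridor in which the only reward comes from reaching the goal cell, and all parameters are illustrative. Real robot navigation involves far larger, continuous state spaces built from raw sensor readings. Practical systems therefore usually replace the table with a function approximator such as a neural network.

```python
import random


def train_corridor(n_states=6, episodes=300, alpha=0.5, gamma=0.9,
                   epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy 1D corridor (hypothetical environment).

    The robot starts in cell 0; the goal is the last cell.
    Actions: 0 = move left, 1 = move right. Reward is 1 on reaching the goal, else 0.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy: explore at random, or when both actions look equally good
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            reward = 1.0 if s_next == goal else 0.0
            # The goal is terminal, so there is no future value beyond it
            future = 0.0 if s_next == goal else gamma * max(q[s_next])
            q[s][a] += alpha * (reward + future - q[s][a])
            s = s_next
    return q


q = train_corridor()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(len(q) - 1)]
print(policy)  # the learned greedy policy moves right, toward the goal, in every cell
```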

As the landscape of autonomous robotics continues to advance, the importance of robust navigation algorithms cannot be overstated. These algorithms not only facilitate the safe and efficient movement of robots but also enhance their ability to interact with the environment and perform tasks autonomously. Consequently, businesses that invest in developing and refining navigation algorithms will be better positioned to leverage the full potential of autonomous systems. In conclusion, as industries increasingly adopt autonomous technologies, the ongoing evolution of navigation algorithms will remain a cornerstone of innovation, driving efficiency and transforming operational capabilities across various sectors.

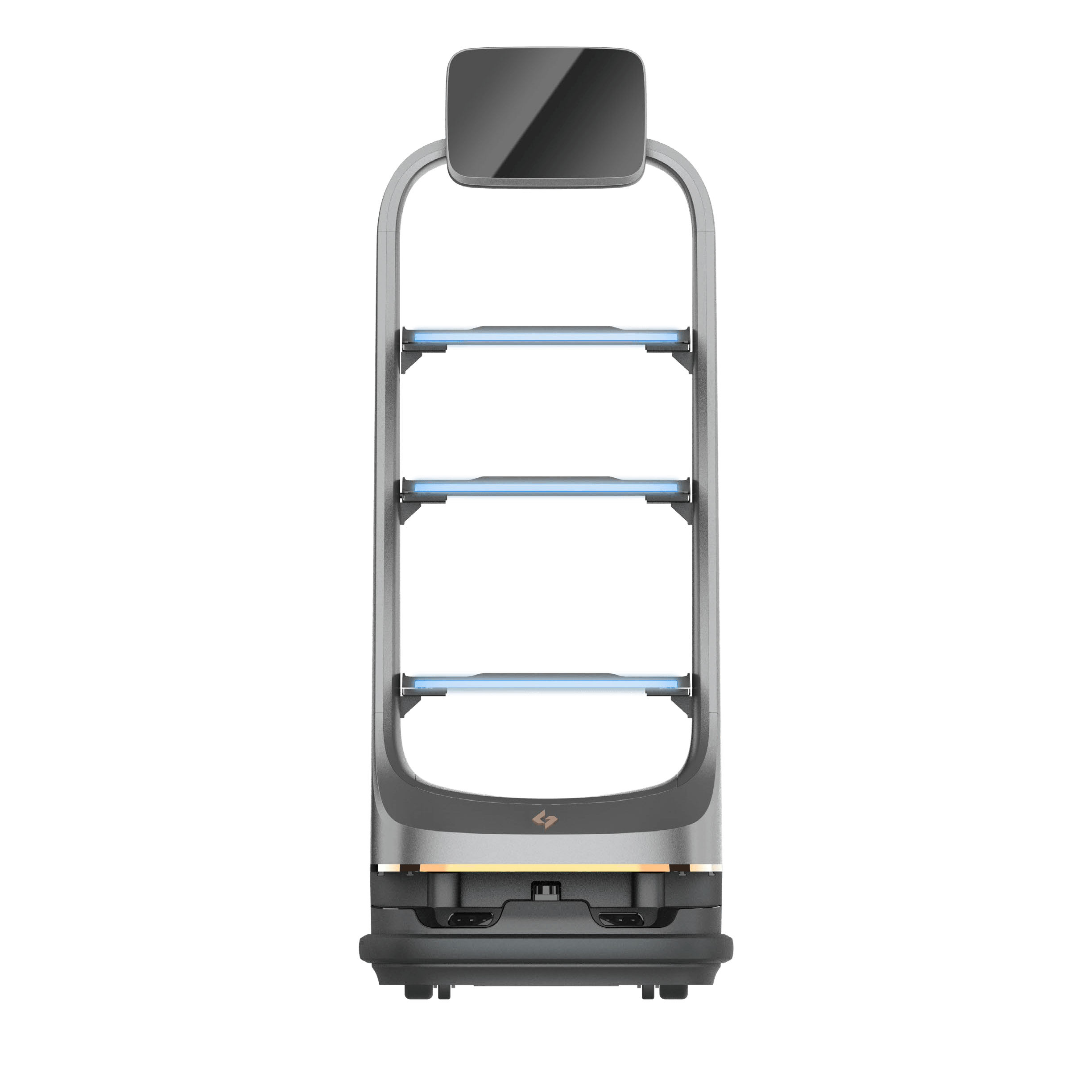

Sensor Integration in Autonomous Robots

In the rapidly evolving field of robotics, sensor integration stands as a cornerstone of autonomous robot functionality. As these machines are increasingly deployed in diverse environments, from manufacturing floors to remote exploration sites, the ability to perceive and interpret their surroundings becomes paramount. The integration of various sensors allows autonomous robots to gather critical data, enabling them to make informed decisions and execute tasks with precision.

At the heart of sensor integration is the need for a multi-faceted approach. Autonomous robots typically employ a combination of sensors, including cameras, LiDAR, ultrasonic sensors, and inertial measurement units (IMUs). Each type of sensor contributes unique capabilities, enhancing the robot’s overall perception. For instance, cameras provide rich visual information, allowing robots to recognize objects, navigate complex environments, and even interpret human gestures. LiDAR sensors offer precise distance measurements, from which detailed 3D maps of the surroundings can be built. These maps are essential for obstacle avoidance and path planning. Ultrasonic sensors provide inexpensive short-range distance readings. IMUs measure acceleration and angular rate, which helps the robot track its orientation and motion between updates from other sensors.
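Ultrasonic rangers and many LiDARs measure distance by pulsed time of flight. The sensor emits a pulse and times its echo, and because the pulse travels out and back, the distance is half of speed × round-trip time. A minimal sketch follows. The speed of sound is an approximation for dry air at about 20 °C, and the echo times are made-up examples.

```python
SPEED_OF_SOUND = 343.0          # m/s in dry air at about 20 °C (approximate)
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance(round_trip_s, wave_speed):
    """Distance to a target from a round-trip echo time; the pulse travels there and back."""
    return wave_speed * round_trip_s / 2.0


print(tof_distance(0.01, SPEED_OF_SOUND))     # ultrasonic echo after 10 ms
print(tof_distance(66.7e-9, SPEED_OF_LIGHT))  # LiDAR return after 66.7 ns
```

A 10 ms ultrasonic echo corresponds to about 1.7 m, while a LiDAR return after 66.7 ns corresponds to about 10 m. This is why LiDAR timing electronics must resolve nanoseconds.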

Moreover, the integration of these sensors is not merely about collecting data; it is also about synthesizing that information into a coherent understanding of the environment. This process, often referred to as sensor fusion, combines data from multiple sources to improve accuracy and reliability. For example, cameras capture color and texture but provide only indirect depth information, while LiDAR measures range accurately but captures no color. By merging the two, a robot can achieve a more comprehensive view of its surroundings, compensating for the limitations of each individual sensor. This enhanced perception is crucial for tasks that require high levels of precision, such as autonomous driving or robotic surgery.
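One of the simplest fusion rules combines two independent, unbiased estimates of the same distance by weighting each with the inverse of its variance. It is shown here as a sketch, not as the method any particular robot uses, and the readings and noise levels below are hypothetical. For a single static quantity, this rule gives the same result as a one-dimensional Kalman filter's measurement update.

```python
def fuse(z1, var1, z2, var2):
    """Fuse two independent, unbiased measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance; the fused
    variance is smaller than either input variance.
    """
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical readings of the distance to one obstacle, as (value in m, variance in m^2)
lidar = (4.02, 0.02 ** 2)   # LiDAR: std. dev. 2 cm (assumed)
camera = (4.30, 0.20 ** 2)  # stereo-camera depth: std. dev. 20 cm (assumed)
estimate, variance = fuse(*lidar, *camera)
print(estimate, variance ** 0.5)
```

The fused estimate, about 4.023 m, sits close to the more precise LiDAR reading. Its variance is smaller than that of either input.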

In addition to improving situational awareness, effective sensor integration also plays a vital role in the robot’s ability to adapt to dynamic environments. Autonomous robots must navigate spaces that are often unpredictable, filled with moving objects and varying conditions. By continuously processing data from their sensors, these robots can adjust their actions in real-time, ensuring safety and efficiency. For instance, a delivery robot equipped with both cameras and ultrasonic sensors can detect pedestrians and obstacles, allowing it to alter its path seamlessly without human intervention.

Furthermore, the advancement of artificial intelligence (AI) and machine learning algorithms has significantly enhanced the capabilities of sensor integration in autonomous robots. These technologies enable robots to learn from their experiences, improving their decision-making processes over time. As robots encounter new scenarios, they can analyze sensor data to refine their understanding and responses, leading to greater autonomy and effectiveness in their operations.

However, the integration of sensors also presents challenges that must be addressed. Issues such as data overload, sensor calibration, and environmental interference can complicate the process. To mitigate these challenges, engineers and developers must focus on creating robust algorithms that can filter and prioritize sensor data, ensuring that the most relevant information is utilized for decision-making. Additionally, ongoing research into new sensor technologies and integration techniques continues to push the boundaries of what autonomous robots can achieve.
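As one simple example of filtering raw sensor data, a median filter suppresses isolated outliers such as spurious ultrasonic echoes or dropouts. The window size and readings below are hypothetical. This centered version looks at future samples, so a real-time implementation would instead use a trailing window over past readings and accept a short delay.

```python
from statistics import median


def median_filter(readings, window=5):
    """Replace each reading with the median of a centered window, suppressing isolated spikes."""
    half = window // 2
    out = []
    for i in range(len(readings)):
        lo, hi = max(0, i - half), min(len(readings), i + half + 1)  # window shrinks at the edges
        out.append(median(readings[lo:hi]))
    return out


# Hypothetical ultrasonic ranges (m) with one spurious echo (7.20) and one dropout (0.00)
raw = [1.50, 1.51, 1.49, 7.20, 1.50, 1.52, 0.00, 1.51, 1.50]
filtered = median_filter(raw)
print(filtered)
```

Both spikes disappear while the steady readings near 1.5 m are preserved. A moving average, by contrast, would smear each spike across its neighbors.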

In conclusion, sensor integration is a fundamental aspect of autonomous robots, enabling them to perceive, interpret, and interact with their environments effectively. By leveraging a diverse array of sensors and employing advanced data fusion techniques, these robots can navigate complex scenarios with agility and precision. As technology continues to advance, the potential for autonomous robots to transform industries and enhance human capabilities will only grow, underscoring the importance of continued innovation in sensor integration.

Q&A

1. **Question:** What are the primary sensors used in autonomous robots?

**Answer:** The primary sensors include LiDAR, cameras, ultrasonic sensors, and IMUs (Inertial Measurement Units).

2. **Question:** What role does the control system play in autonomous robots?

**Answer:** The control system processes sensor data and makes decisions to navigate and perform tasks, ensuring the robot operates effectively in its environment.

3. **Question:** How do autonomous robots achieve navigation and mapping?

**Answer:** Autonomous robots use algorithms like SLAM (Simultaneous Localization and Mapping) to create maps of their environment while tracking their own location within that map.

The core components of autonomous robots include sensors for environmental perception, actuators for movement and manipulation, a processing unit for decision-making and control, and software algorithms for navigation and task execution. Together, these elements enable robots to operate independently, adapt to dynamic environments, and perform complex tasks with minimal human intervention. The integration and optimization of these components are crucial for enhancing the efficiency, reliability, and functionality of autonomous robotic systems.